Hello there community!

Artificial intelligence (AI), while advanced in many ways, remains vulnerable to manipulation and deception, particularly when targeted by well-crafted scams. These can include fake data inputs, adversarial attacks, and misleading patterns designed to exploit AI's pattern-recognition systems. The problem arises because AI systems, even those built on sophisticated machine learning, may lack context or nuanced understanding, so they can be deceived by tricks that a human might spot. This vulnerability raises concerns, especially as AI takes on more critical decision-making roles in finance, security, and customer service.

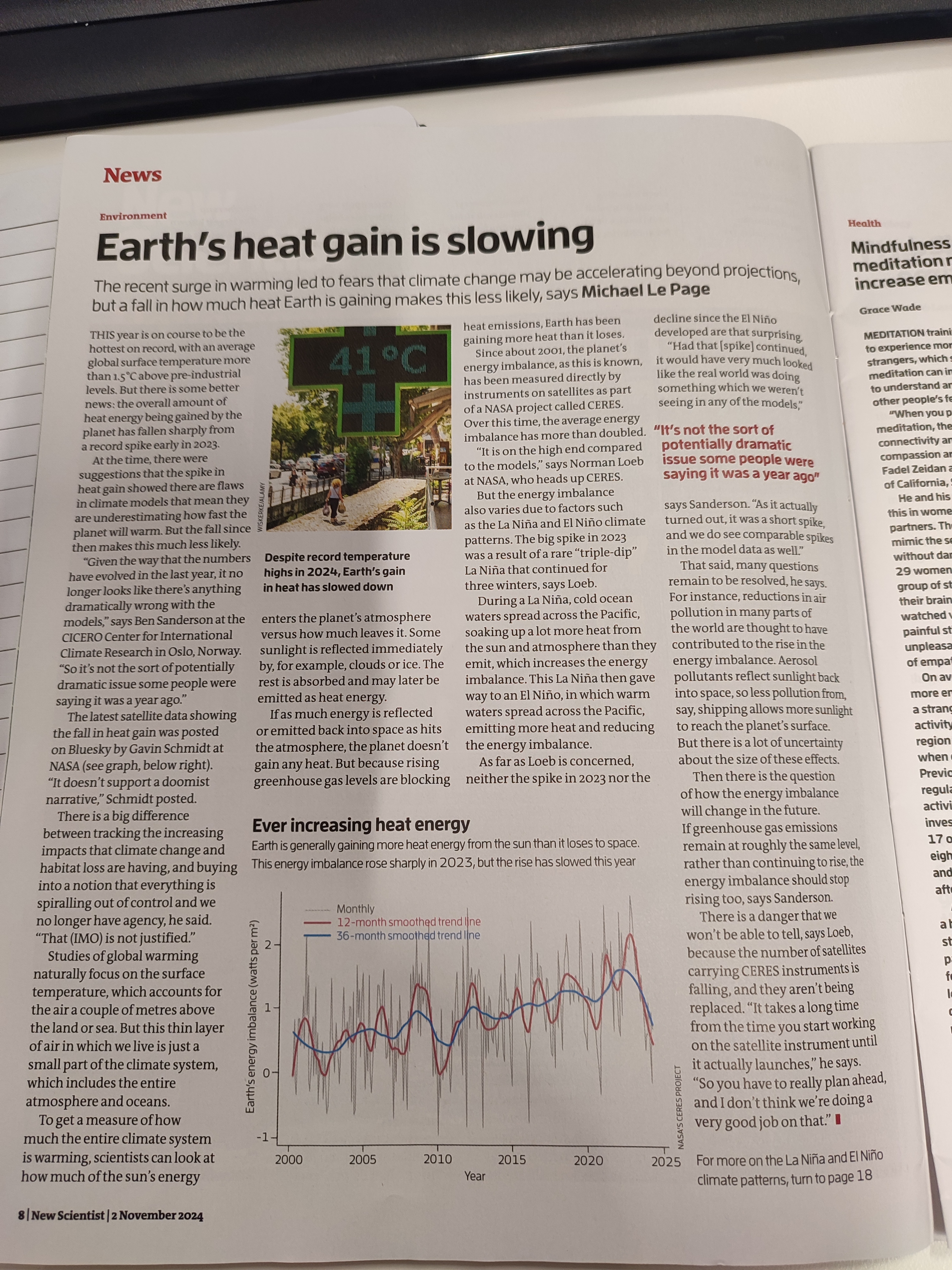

I'm sharing an article from New Scientist (November 2024), along with some key takeaways (see the attached photo).

The article explores how large language models (LLMs), including OpenAI's GPT-3.5 and GPT-4 and Meta's LLaMA 2, can be susceptible to scams despite their advanced design. Researchers from JP Morgan tested these models against a range of scam scenarios and found that some models are more easily deceived than others, particularly when given persuasive prompts. GPT-3.5 was the most vulnerable, while LLaMA 2 resisted scams best. The research underscores that, while LLMs have vast potential, they still require human oversight in decision-making because sophisticated tactics can trick them.

Key Takeaways

- Varying Susceptibility to Scams: The models' vulnerability varied widely, with GPT-3.5 falling for 22% of scams, GPT-4 for 9%, and LLaMA 2 for only 3%.

- Impact of Personas and Persuasive Tactics: Adding personas (like a financially knowledgeable individual) did not make the models more susceptible, but persuasive prompts based on Cialdini's principles (e.g., likability, reciprocity) increased vulnerability.

- Model Advancements in Safety: OpenAI notes that its latest model, o1, released in September 2024, is better equipped to resist scams, suggesting an ongoing focus on improving AI safety.

- Need for Human Oversight: Experts such as Alan Woodward of the University of Surrey emphasize that LLMs shouldn't be the final decision-makers in any setting without human oversight, given the models' opaque "black box" nature.

- Challenges in Scam Awareness: Because LLMs lack a full understanding of scam types and context, the study reinforces the importance of ongoing research into improving AI's ability to identify deceptive scenarios on its own.


------------------------------

Aya Pariy

------------------------------