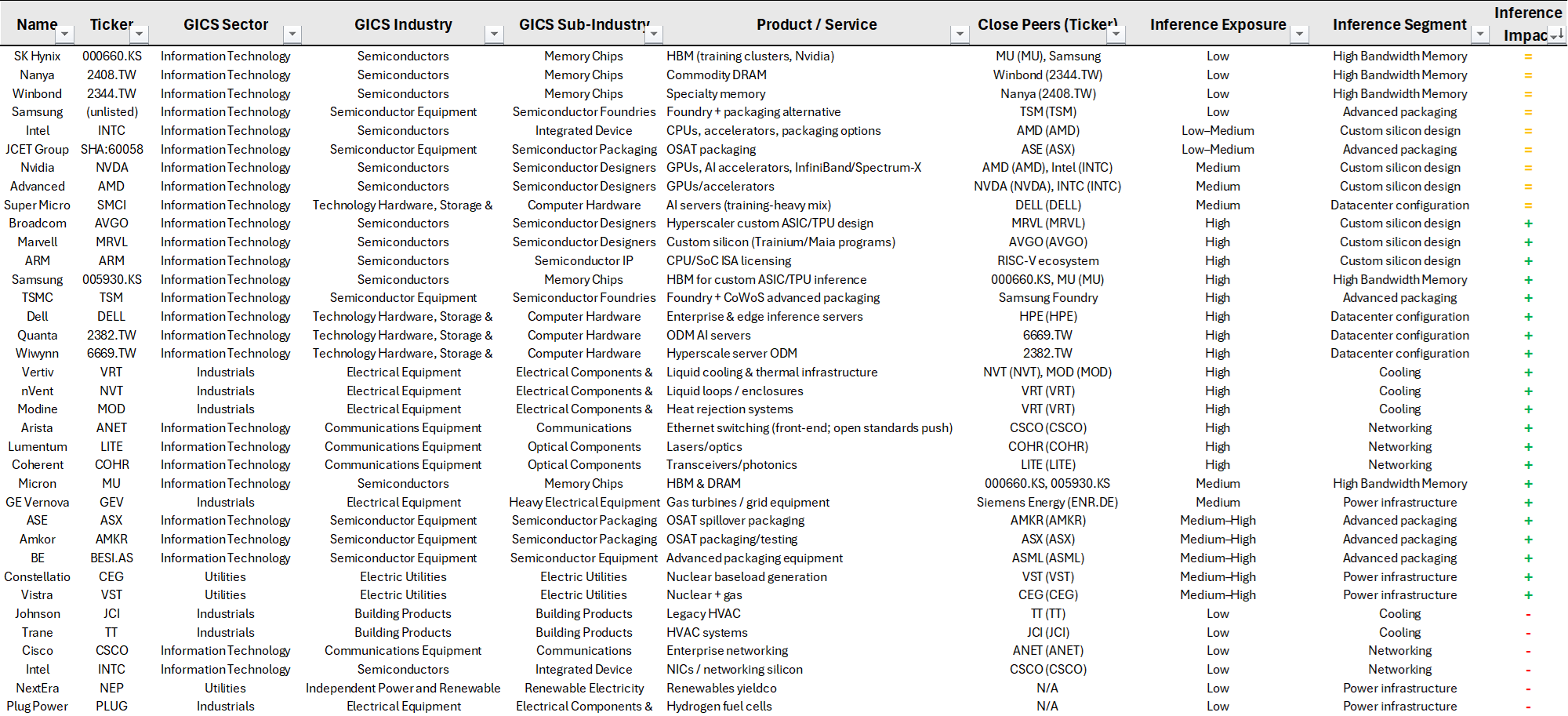

Below are some insights on inference as a new secular trend within the GenAI megatrend, along with a table summarizing key players. I hope this is helpful; do not hesitate to comment or provide corrections.

Inference New Era

The AI value chain is undergoing a structural rotation from training-centric compute (2023–2025) to inference-dominant workloads (2025–2028). This is not a cyclical slowdown but a change in the economic center of gravity.

What just ended (Training Era)

- AI economics were dominated by one-off, capital-intensive training runs.

- Performance mattered more than cost: Nvidia's general-purpose GPUs (H100) won decisively.

- Result: Nvidia data center revenue exploded (roughly $15B → $115B in two fiscal years, FY2023 to FY2025).

- Ecosystem beneficiaries: TSMC (fabs), SK Hynix (HBM), advanced packaging, power & cooling.

- But training is episodic, not linear. Each new model is a discrete CapEx cycle with diminishing marginal gains due to data saturation.

What's starting now (Inference Era)

- Inference is now the majority of workloads, driven by:

  - Agentic workflows (one task → hundreds/thousands of model calls)

  - Continuous, always-on AI usage instead of single queries

- Inference economics are brutally price-sensitive:

  - $0.01 vs $0.001 per query = 10× annual cost difference at scale

  - Switching costs are minimal → commoditization risk

- This favours:

  - Specialized silicon (ASICs, TPUs, LPUs) over expensive general-purpose GPUs.

  - Distributed, latency-optimized infrastructure (edge + on-prem).

- Nvidia doesn't disappear, but it faces a margin and expectations reset as the "Nvidia tax" becomes untenable at inference scale.
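
To make the per-query arithmetic above concrete, here is a minimal Python sketch. Only the $0.01 and $0.001 price points come from the text; the daily task volume and the calls-per-task multiplier are hypothetical assumptions chosen purely for illustration.

```python
def annual_inference_cost(price_per_call: float,
                          tasks_per_day: float,
                          calls_per_task: int = 1) -> float:
    """Annual spend in dollars: price per model call x calls per task x tasks per day x 365."""
    return price_per_call * calls_per_task * tasks_per_day * 365


# Hypothetical volume (an assumption, not a figure from this post).
TASKS_PER_DAY = 100_000_000

# Single-query usage at the two price points mentioned in the text.
high = annual_inference_cost(0.01, TASKS_PER_DAY)
low = annual_inference_cost(0.001, TASKS_PER_DAY)

# Agentic usage: one task fans out into many model calls.
# 500 calls per task is an illustrative assumption.
agentic_high = annual_inference_cost(0.01, TASKS_PER_DAY, calls_per_task=500)
agentic_low = annual_inference_cost(0.001, TASKS_PER_DAY, calls_per_task=500)

print(f"Single-query: ${high:,.0f} vs ${low:,.0f} per year")
print(f"Agentic:      ${agentic_high:,.0f} vs ${agentic_low:,.0f} per year")
print(f"Absolute gap, single-query: ${high - low:,.0f}")
print(f"Absolute gap, agentic:      ${agentic_high - agentic_low:,.0f}")
```

The ratio between the two price points stays at 10× regardless of volume, but the absolute dollar gap grows with every additional model call, which is why agentic workloads sharpen the price sensitivity.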

Capital flows rotate rather than disappear

Training-era profits don't vanish; they redistribute:

- From GPUs → custom silicon designers

- From centralized clusters → packaging, optics, cooling, power, and edge infrastructure

- From variable renewables → baseload nuclear and gas

------------------------------

Carlos Salas

Portfolio Manager & Freelance Investment Research Consultant

------------------------------